My first foray into software development when I was a kid was as a web developer. I don’t admit this to many people, but the first language I ever learned halfway decently was PHP. I led a confused childhood. After that, though, I was introduced to Ruby on Rails, and I’ve been using it ever since.

I don’t do web development professionally anymore, but I have a number of web-based side projects, making websites for friends or family. Most of the time, the people I work with are the brains of the operation, and I’m the brawn: they decide what they want, and I make it for them. Also, most of the time the people I’m working with are non-technical. So as I make progress on their project and want to obtain feedback, I’m rarely looking for feedback on my code (that’s always perfect anyway, of course). I want them to play around with the site itself.

There are some obvious ways to do that. We could meet in person and they could play with it on my laptop, or they could VPN in and access my local server remotely, or I could deploy to a staging server, and so on. These are fine solutions, but they’re synchronous and don’t scale well. Perhaps more importantly, they get in my way: my reviewers can only look at essentially one thing at a time.

These projects are all using Rails 5, and are hosted on a private GitLab instance. While doing some research into how I might be able to move a bit faster, I read about GitLab’s Review Apps, and realized I’d hit a gold mine. This post documents how I got it working for my Rails projects in the hope that it will be useful to you.

Review Apps

I was already using GitLab CI to test my Rails project, as well as to deploy it to staging and production. Deploying requires the use of environments, and once you have those figured out, review apps build naturally on top of them. Deploying is pretty cool: once the deploy finishes successfully, GitLab shows the URL you specified in the environment as a way to view the deployment, which makes things pretty seamless. Review apps are just like that, except the environment is dynamic: both its name and its URL are typically derived from the branch name. That means you get a separate deployment per branch, so if you structure your project correctly, you can get nicely isolated deployments for every merge request you make, all running at the same time.

Prerequisites

Review apps aren’t magical. You need the infrastructure to support them.

Ability to handle unique, branch-based URLs

Review apps simply assume that the URL they generate, by combining the branch name with the base domain you specify, actually points to the app for that branch. We’ll get into the technical details of this in a bit, but in my case, the URL looks like this:

url: http://$CI_COMMIT_REF_SLUG.example.com

So if I push up a branch named support_review_apps, that turns into http://support-review-apps.example.com. Review apps do nothing to ensure that host exists; they just assume it does. In my case, that meant I needed a wildcard DNS entry for *.example.com. Since my registrar is 1&1 (which doesn’t support wildcard DNS entries), I started using Namecheap for my DNS. It’s pretty awesome. If you’re unfamiliar with wildcard entries, you essentially create a *.example.com record pointing to a given IP. Then foo.example.com, which doesn’t have an entry of its own, will resolve to that same IP. So will bar.example.com; you get the idea. Why is this useful? Because our web server can then look at the requested hostname and make sure the traffic ends up in the right place. We’ll get to that in a minute.
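To make the branch-to-hostname step concrete: as I understand GitLab’s documentation, $CI_COMMIT_REF_SLUG is the branch name lowercased, with every character other than a-z and 0-9 replaced by a dash, shortened to 63 bytes, and stripped of leading and trailing dashes. The Ruby sketch below approximates that rule so you can see which hostname the wildcard record has to cover for a given branch. The ref_slug and review_url helpers are hypothetical, written for illustration; they are not GitLab’s own code.

```ruby
# Hypothetical helper approximating GitLab's documented $CI_COMMIT_REF_SLUG
# rule; this is not GitLab's own implementation.
def ref_slug(ref_name)
  slug = ref_name.downcase
  slug = slug.gsub(/[^a-z0-9]/, '-') # anything outside a-z and 0-9 becomes "-"
  slug = slug[0, 63]                 # shortened to at most 63 characters
  slug.gsub(/\A-+|-+\z/, '')         # no leading or trailing dashes
end

# Builds the review URL the same way the `url:` line in .gitlab-ci.yml does.
# "example.com" is a placeholder for your real base domain.
def review_url(ref_name, base_domain = 'example.com')
  "http://#{ref_slug(ref_name)}.#{base_domain}"
end

puts review_url('support_review_apps') # prints http://support-review-apps.example.com
puts review_url('Feature/Login_Page')  # prints http://feature-login-page.example.com
```

One consequence worth knowing: different branch names can collapse to the same slug (support_review_apps and support-review-apps, for example), in which case they would share a hostname and a deployment.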

Somewhere you can dynamically deploy

This might look like docker to you, or a kubernetes cluster. For me, it’s a single LXD container configured for my project (the right version of Ruby, etc.), using bundler in --deployment mode to put all the gems in a single tree instead of installing them on the system. Then I can deploy different branches of the same project into different directories using capistrano, which we’ll cover in a minute.

Get on with it

Configuring the project

So we need to deploy the same project multiple times to the same infrastructure. This is a perfect use case for docker, but docker doesn’t run well within LXD (update: it does nowadays if you enable nesting), so I was limited to a single container. Since I wanted to keep each instance isolated, and since they didn’t need to be particularly performant, I didn’t want to deal with a shared, server-based database with different credentials per instance and so on. In other words, the Rails environment I wanted for review apps was not going to be production, where I use PostgreSQL. So I created a new environment called “review” in config/environments/. It’s really just a copy of the “production” environment, but it allows us to specify a database for that environment in config/database.yml that looks like this:

review:
  <<: *default
  database: db/review.sqlite3
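The `<<: *default` line is a YAML merge key: review inherits everything defined under default and then adds or overrides its own keys. One way to check what that merge produces is to load the file with Ruby’s bundled YAML library (Psych). In the sketch below, the default block is an assumption modeled on what Rails generates for SQLite; only the review block comes from this post.

```ruby
require 'yaml'

# Hypothetical config/database.yml. The `default` block is an assumption
# (modeled on what Rails generates for SQLite); the `review` block is the
# one from this post.
DATABASE_YML = <<~YAML
  default: &default
    adapter: sqlite3
    pool: 5
    timeout: 5000

  review:
    <<: *default
    database: db/review.sqlite3
YAML

# safe_load rejects aliases such as *default unless they are allowed
# explicitly (the aliases: keyword needs Psych 3.1 / Ruby 2.6 or newer).
config = YAML.safe_load(DATABASE_YML, aliases: true)
review = config.fetch('review')

puts review.fetch('adapter')  # prints sqlite3 (inherited from default)
puts review.fetch('database') # prints db/review.sqlite3 (set by review)
```

Because keys written under review win over inherited ones, if your own default block points at PostgreSQL you would also want adapter: sqlite3 under review.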

Now our database is specific to that instance. We’ll also need a secret key base for that environment in config/secrets.yml:

review:
  secret_key_base: 9c9cdd6<snip>dd5667b

Of course, for production this should come from an environment variable rather than being committed, but I’m okay with hard-coding it for review.
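If you’d rather not copy a key from another environment, a fresh value for the review block is easy to generate. The sketch below uses Ruby’s SecureRandom; as far as I know, 64 random bytes hex-encoded to 128 characters is the same shape of value that `rails secret` prints, but treat that as an assumption rather than a description of Rails internals.

```ruby
require 'securerandom'

# 64 random bytes, hex-encoded to 128 characters; as far as I know this is
# the same shape of value that `rails secret` prints.
review_secret = SecureRandom.hex(64)

# Print a snippet ready to paste into config/secrets.yml.
puts "review:\n  secret_key_base: #{review_secret}"
```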

Configuring the web server

So we have wildcard DNS pointing to an LXD container that is nearly set up for dynamic deployment. We still need to answer the question: “How do we ensure that traffic to a branch-based URL ends up on that branch’s deployed site?”

Since all deployments for review apps on this project were going to the same container, I configured nginx to be smart about it, and grab the name from the URL in order to figure out where the traffic should go. That configuration looked like this:

server {
    listen 80;
    # Dots are escaped so that "." matches only a literal dot.
    server_name ~^(www\.)?(?<sname>.+?)\.example\.com$;
    root /var/www/$sname/current/public;

    location / {
        proxy_pass http://unix:/var/www/$sname/shared/tmp/sockets/puma.sock;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    location ~* ^/assets/ {
        expires 1y;
        add_header Cache-Control public;
        add_header Last-Modified "";
        add_header ETag "";
        break;
    }

    access_log /var/log/nginx/$sname-access.log;
    error_log /var/log/nginx/reviews-error.log debug;
}

Since *.example.com was already hitting nginx, this meant that visiting foo.example.com would proxy to a puma socket at /var/www/foo/shared/tmp/sockets/puma.sock. Of course, if that socket didn’t exist, nginx said so (with a 502 Bad Gateway). Now we just needed a deployment method that actually deployed sites into subdirectories of /var/www/ based on branch name.
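Ruby regular expressions support the same `(?<name>...)` named-capture syntax, so one way to sanity-check the routing is to mirror the server_name pattern in Ruby. This is only a sketch, not anything nginx runs: route_for is a hypothetical helper, example.com stands in for the real domain, and the dots before example and com are escaped because an unescaped `.` in a regex matches any character.

```ruby
# Mirrors the nginx server_name regex (with the dots escaped): an optional
# "www." prefix, then a lazily matched name that nginx exposes as $sname.
HOST_PATTERN = /\A(www\.)?(?<sname>.+?)\.example\.com\z/

# Hypothetical helper: returns the document root and puma socket that the
# server block would use for a Host header, or nil if the host doesn't
# match (nginx would hand such a request to its default server instead).
def route_for(host)
  match = HOST_PATTERN.match(host)
  return nil unless match

  sname = match[:sname]
  {
    root:   "/var/www/#{sname}/current/public",
    socket: "/var/www/#{sname}/shared/tmp/sockets/puma.sock"
  }
end

puts route_for('support-review-apps.example.com')[:socket]
# prints /var/www/support-review-apps/shared/tmp/sockets/puma.sock
puts route_for('www.foo.example.com')[:root] # prints /var/www/foo/current/public
p route_for('example.com')                   # prints nil
```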

Configuring capistrano to deploy the way we need

All my apps are using puma. So to deploy with capistrano, I add the following to the development group of my Gemfile:

gem 'capistrano'
gem 'capistrano-rails'
gem 'capistrano3-puma'

I don’t intend to make this post a capistrano tutorial; there are already several out there. However, assuming you already have it set up, there are four things we need to change from the typical capistrano behavior.

First of all, since we want to use the new “review” environment, make sure you create an environment-specific deploy script in config/deploy/review.rb (just copy the “production” one and modify as necessary). Since I’m using an sqlite database, I want to make sure it’s shared across revisions of the deployment, so in that file I placed code to do exactly that:

append :linked_files, "db/review.sqlite3"

namespace :deploy do
  namespace :check do
    # The database file must exist before it's linked in, or
    # deploy:check:linked_files will abort the deploy.
    before :linked_files, :create_db do
      on primary :db do
        execute "touch #{shared_path}/db/review.sqlite3"
      end
    end
  end
end
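To see why a single file under shared/ survives from one deployment to the next, here is a small local simulation using Ruby’s FileUtils in a temporary directory. It mimics, under my assumptions about Capistrano’s usual releases/ and shared/ layout, what linked_files arranges: the real file lives in shared/, and each new release gets a symlink to it. The deploy_release and simulate_two_releases helpers are hypothetical and touch only the local temp directory.

```ruby
require 'fileutils'
require 'tmpdir'

# Hypothetical helper simulating one Capistrano-style release: create
# releases/<name>, symlink the shared SQLite file into it (what
# linked_files arranges), then repoint "current" at the new release.
def deploy_release(deploy_to, name)
  release = File.join(deploy_to, 'releases', name)
  FileUtils.mkdir_p(File.join(release, 'db'))
  FileUtils.ln_s(File.join(deploy_to, 'shared', 'db', 'review.sqlite3'),
                 File.join(release, 'db', 'review.sqlite3'))

  current = File.join(deploy_to, 'current')
  FileUtils.rm_f(current) # removes only the old symlink, not the release
  FileUtils.ln_s(release, current)
  release
end

# Deploys twice into a throwaway directory and returns what the second
# release sees in its database file.
def simulate_two_releases
  Dir.mktmpdir do |deploy_to|
    shared_db = File.join(deploy_to, 'shared', 'db', 'review.sqlite3')
    FileUtils.mkdir_p(File.dirname(shared_db))
    FileUtils.touch(shared_db) # what the create_db hook does on the server

    first = deploy_release(deploy_to, '20170601000000')
    File.write(File.join(first, 'db', 'review.sqlite3'), 'written by release 1')

    deploy_release(deploy_to, '20170602000000')
    File.read(File.join(deploy_to, 'current', 'db', 'review.sqlite3'))
  end
end

puts simulate_two_releases # prints written by release 1
```

Both releases’ symlinks resolve to the same file under shared/, so data written while the first release was live is still there after the second deploy.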

Second, by default, capistrano deploys to /var/www/<application>. capistrano3-puma also uses the application name to determine where to place puma’s socket. So if we can support changing the application name on the fly, we can support the branch-specific nesting that our nginx configuration will be expecting. We could use rake parameters for this, but I liked environment variables better, so in config/deploy.rb I set the application like this:

set :application, ENV.fetch('application', 'example.com')

So it defaults to the production application name, but now I can call cap review deploy application=foo and it’ll deploy to /var/www/foo/, which is exactly what I want.

Third, by default capistrano deploys the master branch. With review apps we want to deploy a specific branch, so that’s another line in config/deploy.rb like this:

set :branch, ENV.fetch('branch', 'master')

Still defaulting to master, but now we can call cap review deploy branch=my-branch-name and it’ll deploy the my-branch-name branch instead of master.
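Passing application=foo and branch=my-branch-name on the command line works because Rake, which capistrano is built on, treats arguments of the form NAME=value as environment variable assignments rather than task names. The sketch below imitates that behavior with a hypothetical split_cli_args helper (Rake’s real parsing lives inside Rake::Application), then applies the same ENV.fetch defaults as config/deploy.rb. The /var/www/<application> path assumes capistrano’s default deploy_to.

```ruby
# Hypothetical helper: splits argv roughly the way Rake does, treating
# NAME=value pairs as environment overrides and everything else as a
# stage or task name.
def split_cli_args(argv)
  env = {}
  tasks = []
  argv.each do |arg|
    if (m = arg.match(/\A(\w+)=(.*)\z/m))
      env[m[1]] = m[2]
    else
      tasks << arg
    end
  end
  [tasks, env]
end

# Applies the same fallbacks as config/deploy.rb, reading from a plain hash
# instead of the real ENV so the example has no side effects.
def resolve_settings(env)
  application = env.fetch('application', 'example.com')
  {
    application: application,
    branch:      env.fetch('branch', 'master'),
    deploy_to:   "/var/www/#{application}" # capistrano's default layout
  }
end

tasks, env = split_cli_args(%w[review deploy branch=my-branch-name application=my-branch-name])
p tasks                              # prints ["review", "deploy"]
puts resolve_settings(env)[:deploy_to] # prints /var/www/my-branch-name
puts resolve_settings({})[:branch]     # prints master
```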

Finally, what we’ve discussed so far has a “leak” issue. Every branch that’s pushed will be deployed onto this same container, and that deployment stays there even after the branch has been merged and deleted! Eventually we’ll run out of disk space. Thankfully, review apps support stopping an environment once its branch is deleted; we just need to provide a way to do it. I added a simple undeploy task to config/deploy.rb that looks like this:

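namespace :deploy do
  task :undeploy do
    # Stop puma first; puma:stop runs its own `on` block for the app servers.
    invoke 'puma:stop'
    on roles(:app) do
      # deploy_to is /var/www/<application> unless overridden.
      execute "rm -rf #{fetch(:deploy_to)}"
    end
  end
end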

So then stopping and removing a deployment is as simple as calling cap review deploy:undeploy application=foo.
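One thing to keep in mind: because application falls back to the production name (example.com), running cap review deploy:undeploy without application=... would target /var/www/example.com on the review container, unless config/deploy/review.rb overrides deploy_to. A small guard can refuse to run in that case. The sketch below is a hypothetical plain-Ruby helper, not part of capistrano or capistrano3-puma; inside the real task you might call it on fetch(:application) before the rm -rf.

```ruby
# Hypothetical safety check for an undeploy task: only allow removing a
# directory when an explicit, non-default application name was given.
class UnsafeUndeploy < StandardError; end

def undeploy_path(application, default_application: 'example.com', base: '/var/www')
  if application.nil? || application.empty? || application == default_application
    raise UnsafeUndeploy, "refusing to undeploy #{application.inspect}"
  end
  # A branch slug contains only a-z, 0-9 and "-", so rejecting "/" and ".."
  # keeps rm -rf confined to a single directory under base.
  if application.include?('/') || application.include?('..')
    raise UnsafeUndeploy, "suspicious application name #{application.inspect}"
  end

  File.join(base, application)
end

puts undeploy_path('support-review-apps') # prints /var/www/support-review-apps
begin
  undeploy_path('example.com')
rescue UnsafeUndeploy => e
  puts e.message # prints refusing to undeploy "example.com"
end
```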

Putting all of this together and using review apps

We now have a Rails project with a “review” environment, a capistrano configuration for deploying that environment, and the basic infrastructure necessary to host the deployments. Now we just need to flip the switch to enable GitLab Review Apps.

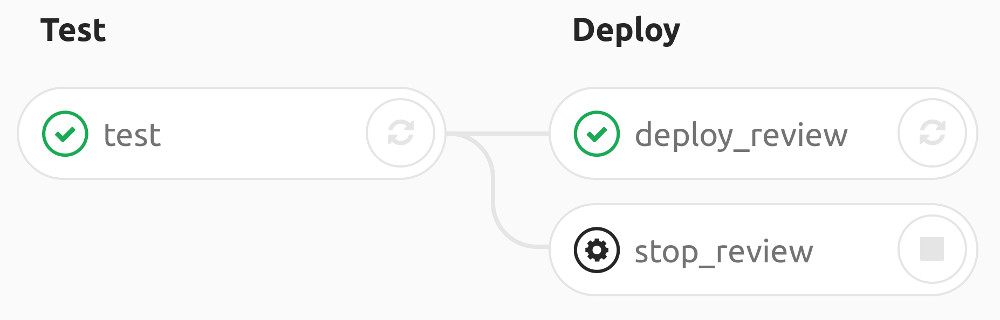

That, of course, goes into the .gitlab-ci.yml file. You probably already have a deploy stage; you just need to add jobs for deploying and stopping the review apps, which look like this:

deploy_review:
  stage: deploy
  tags: [ruby-2.4.1]
  script:
    - eval "$(ssh-agent -s)"
    - ssh-add <(echo -e "$SSH_PRIVATE_KEY")
    - bundle exec cap review deploy branch=$CI_COMMIT_REF_NAME application=$CI_COMMIT_REF_SLUG
  environment:
    name: review/$CI_COMMIT_REF_NAME
    url: http://$CI_COMMIT_REF_SLUG.example.com
    on_stop: stop_review
  only:
    - branches
  except:
    - master

stop_review:
  stage: deploy
  tags: [ruby-2.4.1]
  variables:
    GIT_STRATEGY: none
  script:
    - eval "$(ssh-agent -s)"
    - ssh-add <(echo -e "$SSH_PRIVATE_KEY")
    - bundle exec cap review deploy:undeploy application=$CI_COMMIT_REF_SLUG
  environment:
    name: review/$CI_COMMIT_REF_NAME
    action: stop
  when: manual
  # Match deploy_review so both jobs appear in the same pipelines.
  only:
    - branches
  except:
    - master

Let’s walk through the deployment job first, piece by piece.

deploy_review:
  stage: deploy
  tags: [ruby-2.4.1]

This defines the job, putting it into the deploy stage (which in my pipeline comes after test). It needs a runner with Ruby v2.4.1 on it, so I tag it as such.

  script:
    - eval "$(ssh-agent -s)"
    - ssh-add <(echo -e "$SSH_PRIVATE_KEY")
    - bundle exec cap review deploy branch=$CI_COMMIT_REF_NAME application=$CI_COMMIT_REF_SLUG

This is where the rubber meets the road. Capistrano works over SSH, so I have a private key saved as a variable in GitLab that I load into ssh-agent, and then I deploy, setting the branch and application environment variables we discussed earlier. I use $CI_COMMIT_REF_SLUG for the application name to ensure that it’s safe for use in a URL (and as a directory name).

  environment:
    name: review/$CI_COMMIT_REF_NAME
    url: http://$CI_COMMIT_REF_SLUG.example.com
    on_stop: stop_review

Here’s the dynamic environment. Note how the name and url both use the branch name (although the url uses the slug to ensure it’s safe for use in a URL). This is the URL that will be shown on a merge request to view that deployment. Finally, we specify the job that needs to run in order to stop this deployment, stop_review. GitLab will run this job automatically once the branch is deleted. Let’s take a look at that job now, piece by piece.

stop_review:
  stage: deploy
  tags: [ruby-2.4.1]
  variables:
    GIT_STRATEGY: none

Here again we name the job, and specify that it requires a runner with Ruby v2.4.1. We also specify GIT_STRATEGY: none so the runner doesn’t try to check out code that was deleted. This is a little odd to me since I’m still able to use capistrano here, so I wonder if I’m taking advantage of the fact that this ends up running on the same runner and the code is already there. I need to investigate that further, but this works for now.

  script:
    - eval "$(ssh-agent -s)"
    - ssh-add <(echo -e "$SSH_PRIVATE_KEY")
    - bundle exec cap review deploy:undeploy application=$CI_COMMIT_REF_SLUG

As before, we load the SSH key into ssh-agent, and then run our undeploy task with the same application variable to make sure we blow away the right deployment.

  environment:
    name: review/$CI_COMMIT_REF_NAME
    action: stop
  when: manual

Note that this environment name matches the one in the deploy_review job, and the action is stop, which tells GitLab this is how to stop that environment. We mark the job as manual so it doesn’t run when we push, but it still runs automatically when the branch is deleted, because we named it in the on_stop of the deployment job.

Conclusion

It took me a while to distill the documentation down into those steps, but once you’ve gone through the process you realize that it makes sense and it’s easy to do again. This has drastically sped up my development time as reviews can now happen asynchronously and simultaneously. I really can’t recommend it enough, and I hope this post helps you accomplish the same thing!